'Harry Potter as Balenciaga' Ad Made by AI is Creating Waves: What is Deepfake and How Does it Work?

It’s uncanny. It’s fascinating. It’s the new scene: popular culture welcoming the age of artificial intelligence. This past week, an AI deepfake advertisement that imagines famed Harry Potter characters as models for the French luxury brand Balenciaga, created by Patreon user demonflyingfox, caught the fancy of the internet.

Check out the ad below:

My guilty secret is that I am enjoying this Midjourney experiment more than I should. Balenciaga X Harry Potter catwalk pic.twitter.com/KZ8LIdekcC— Julian Caraulani (@JulianCaraulani) March 23, 2023

The video is titled “Harry Potter by Balenciaga.” It features deepfaked vocals based on the original cast, set to a pounding electronic beat.

Snape is seen in a sharply cut leather jacket and turtleneck, while Dumbledore wears a wide-brimmed leather hat and blacked-out round sunglasses. “To the well-organised mind, Balenciaga is but the next great adventure,” deepfake Balenciaga Dumbledore says, a twist on his original line, “to the well-organised mind, death is but the next great adventure.”

The video even ends with a model-like Harry, all chiseled cheekbones, uttering “Avada Balenciaga,” a spin on the dark killing curse of the Harry Potter universe.

But what’s truly fascinating is how believable the ad seems. “You just created a 2 milion dollar add for probably less then 10 bucks,” said one user.

And others are truly mesmerised by how well put-together the ad is. “The cheeckbones(sic), the soundtrack, the fashion, the hairstyle and Hugo Weaving as Balenciaga Dumbledore. What an iconic masterpiece,” said another user on YouTube.

“I think it’s addicting to watch because of the backbeat being almost hypnotic in pattern. Matched with the surreal look of the characters you can’t help but just stare,” commented a user, while another talked about how they had gone on a 45-minute walk with the video on loop.

Other videos carrying on the trend are now appearing at a rapid pace. But let’s understand how the video was created, what a deepfake is, and what this ‘masterpiece’ could indicate for AI-generated imagery in the future:

How Did Demonflyingfox Create the Video?

The ad even caught the attention of Elon Musk, who commented on the video with two fire emojis.

“I’m constantly brainstorming which combinations and mash-ups of popular media might work,” demonflyingfox told Dazed.

“I quickly realised these have to be as unexpected as possible, but still make sense. With his innocent and naive vibe, it worked surprisingly well to put Harry in an adult, cold-world scenario. I already put Harry in the Yakuza world so it was only a matter of time till I’d think of the fashion bubble… and the most memeable company is probably Balenciaga right now,” he says.

According to a report by Futurism, AI-generated graphics are currently enjoying a major moment in mainstream culture. An AI image of Pope Francis wearing a Balenciaga-style white papal jacket went viral earlier this month, and many people did not realise the image was fake.

AI-based ‘deepfaking’ has generated much buzz, with people wondering how it is even done. As per the Guardian, deepfakes are the twenty-first century’s equivalent of Photoshopping: they employ a type of artificial intelligence known as deep learning to create images of fictitious events, hence the name deepfake.

How are These Made?

Deepfakes first appeared in 2017 when a Reddit user of the same name posted doctored porn clips on the site. The videos swapped the faces of celebrities such as Gal Gadot, Taylor Swift, Scarlett Johansson, and others onto porn performers, says the Guardian report.

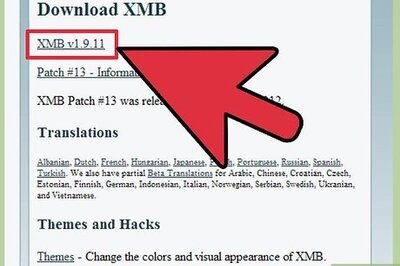

A face-swap video is made in a few steps. First, you put millions of images of the two people’s faces through an AI system known as an encoder. The encoder detects and learns similarities between the two faces, reducing them to their shared common features and compressing the images in the process. After that, a second AI algorithm known as a decoder is trained to recover the faces from the compressed images.

Due to the differences in the faces, you train one decoder to recover the first person’s face and another decoder to retrieve the second person’s face. Simply feed encoded photos into the “wrong” decoder to achieve the face swap. A compressed image of person A’s face, for example, is input into the decoder trained on person B. The decoder then reconstructs person B’s face using the expressions and orientation of face A. This must be done on every frame for a compelling video.
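
The shared-encoder, two-decoder idea described above can be sketched in a few lines of Python. This is only a toy illustration under loud assumptions: random number vectors stand in for face images, simple linear maps stand in for deep neural networks, and every name here (`FaceSwapAutoencoder`, `train_step` and so on) is hypothetical rather than taken from any real deepfake tool.

```python
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def rand_matrix(rows, cols, scale, rng):
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]

def mse(pred, target):
    """Mean squared error between two equally sized matrices."""
    n = len(target) * len(target[0])
    return sum((p - t) ** 2
               for pr, tr in zip(pred, target)
               for p, t in zip(pr, tr)) / n

class FaceSwapAutoencoder:
    """Hypothetical toy model: one shared encoder, one decoder per person."""

    def __init__(self, dim, latent, rng):
        self.encoder = rand_matrix(dim, latent, 0.1, rng)
        self.decoders = {"A": rand_matrix(latent, dim, 0.1, rng),
                         "B": rand_matrix(latent, dim, 0.1, rng)}

    def encode(self, faces):
        return matmul(faces, self.encoder)

    def decode(self, codes, person):
        return matmul(codes, self.decoders[person])

    def loss(self, data):
        # Each person's faces are reconstructed with that person's own decoder.
        return sum(mse(self.decode(self.encode(x), p), x) for p, x in data.items())

    def train_step(self, data, lr):
        enc_grad = [[0.0] * len(self.encoder[0]) for _ in self.encoder]
        for person, faces in data.items():
            codes = self.encode(faces)
            recon = self.decode(codes, person)
            scale = 2.0 / (len(faces) * len(faces[0]))
            resid = [[scale * (r - f) for r, f in zip(rr, fr)]
                     for rr, fr in zip(recon, faces)]
            dec = self.decoders[person]
            dec_grad = matmul(transpose(codes), resid)
            part = matmul(transpose(faces), matmul(resid, transpose(dec)))
            enc_grad = [[g + p for g, p in zip(gr, pr)]
                        for gr, pr in zip(enc_grad, part)]
            self.decoders[person] = [[w - lr * g for w, g in zip(wr, gr)]
                                     for wr, gr in zip(dec, dec_grad)]
        # The encoder is shared, so it receives gradients from both people.
        self.encoder = [[w - lr * g for w, g in zip(wr, gr)]
                        for wr, gr in zip(self.encoder, enc_grad)]

rng = random.Random(0)
# Toy stand-ins for face images: 20 six-number "faces" per person.
data = {"A": rand_matrix(20, 6, 1.0, rng), "B": rand_matrix(20, 6, 1.0, rng)}
model = FaceSwapAutoencoder(dim=6, latent=3, rng=rng)

initial_loss = model.loss(data)
for _ in range(300):
    model.train_step(data, lr=0.05)
final_loss = model.loss(data)

# The swap: encode one of person A's faces, decode with the "wrong" decoder B.
swapped = model.decode(model.encode(data["A"][:1]), "B")
```

The last line is the face swap itself: an encoded frame from person A is passed to the decoder trained on person B. A real system would repeat that step for every frame of the video.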

A generative adversarial network, or GAN, is another method for creating deepfakes. A GAN pits two artificial intelligence algorithms against each other. The generator algorithm is given random noise and converts it into an image. This synthetic image is then added to a stream of real photos, say of celebrities, that are fed into the discriminator, the second algorithm. Initially, the synthetic images will not resemble faces. Yet if the procedure is repeated many times with performance feedback, both the discriminator and the generator will improve. After enough cycles and feedback, the generator will begin making completely realistic faces of nonexistent celebrities, the report explains.
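
The generator-versus-discriminator loop can also be shown in miniature. The sketch below is a deliberately tiny assumption-laden example: single numbers stand in for images, the "real photos" are numbers drawn around 4.0, the generator is a straight line and the discriminator a logistic curve. The class `TinyGan` and its methods are made up for illustration, and, like real GAN training, this toy loop is not guaranteed to settle on a perfect result.

```python
import math
import random

def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

class TinyGan:
    """Hypothetical 1-D GAN: generator a*z + b, discriminator sigmoid(w*x + c)."""

    def __init__(self):
        self.a, self.b = 1.0, 0.0   # generator parameters
        self.w, self.c = 0.0, 0.0   # discriminator parameters

    def generate(self, z):
        return self.a * z + self.b

    def discriminate(self, x):
        """Estimated probability that x is a real sample."""
        return sigmoid(self.w * x + self.c)

    def train_step(self, real_batch, noise_batch, lr):
        fake_batch = [self.generate(z) for z in noise_batch]

        # Discriminator update: push D(real) up and D(fake) down.
        grad_w = grad_c = 0.0
        for x in real_batch:
            err = 1.0 - self.discriminate(x)
            grad_w += err * x
            grad_c += err
        for x in fake_batch:
            err = -self.discriminate(x)
            grad_w += err * x
            grad_c += err
        n = len(real_batch) + len(fake_batch)
        self.w += lr * grad_w / n
        self.c += lr * grad_c / n

        # Generator update: push D(fake) up, using the discriminator's feedback.
        grad_a = grad_b = 0.0
        for z in noise_batch:
            err = (1.0 - self.discriminate(self.generate(z))) * self.w
            grad_a += err * z
            grad_b += err
        self.a += lr * grad_a / len(noise_batch)
        self.b += lr * grad_b / len(noise_batch)

rng = random.Random(0)
gan = TinyGan()
for _ in range(2000):
    real = [rng.gauss(4.0, 1.0) for _ in range(32)]    # stand-in "real photos"
    noise = [rng.gauss(0.0, 1.0) for _ in range(32)]   # random noise input
    gan.train_step(real, noise, lr=0.05)

# After training, new noise is turned into synthetic samples.
samples = [gan.generate(rng.gauss(0.0, 1.0)) for _ in range(5)]
```

Each round, the discriminator gets a little better at telling real from fake, and the generator uses that feedback to make its fakes harder to catch, which is the repeated cycle the report describes.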

How to Spot a Deepfake?

If your AI dystopia doesn’t include images of the Pope in a Balenciaga puffer, I don’t want it. pic.twitter.com/7rWHyj35nZ— Franklin Leonard (@franklinleonard) March 26, 2023

Deepfake images of Pope Francis in a puffer jacket recently went viral. As people were perplexed by how real the image looked, Time explained in a report how to tell whether an image is real or ‘deepfaked.’

“If you look closely at the image of the Balenciaga Pope, you can see a few clear indicators of its AI origins,” the report explains. For example, the cross on his chest is held bizarrely upright, with just a white puffer jacket in place of the other half of the chain. His right hand appears to be holding a hazy coffee cup, but his fingers are wrapped around thin air rather than the cup itself. His eyelid blends into his spectacles, which flow into their own shadow, it says.

Sounds pretty similar to spotting a fake Photoshopped image, right?

The Time report explains that AI picture generators are simply pattern-replicators: they’ve learned what the Pope looks like, as well as what a Balenciaga puffer jacket might look like, and they’re able to squeeze the two together seamlessly. They don’t (yet) understand physics, it says, adding that they have no idea why a crucifix shouldn’t be able to float in midair without a chain, or why eyeglasses and the shadow behind them aren’t the same thing. It is in these often peripheral regions of an image that humans can instinctively detect errors that AI cannot.

However, the report warns that these methods will quickly go out of date as AI continues to improve. The only option that then remains is media literacy.

What are the Consequences of Deepfakes?

Deepfakes are already bringing many potentially harmful effects with them, from revenge porn and compromised safety and privacy for women to images or videos that could even threaten the ‘geopolitical order’, such as fabricated footage of world leaders.

They also usher in what the Guardian calls a ‘no-trust’ world, where plausible deniability may make it hard to uncover what’s true and what’s not, especially where highly placed individuals and world leaders are concerned.

For example, in 2019, Cameroon’s minister of information dismissed as ‘false news’ a video that Amnesty International believed showed the Cameroonian military murdering civilians.
