Social media giant Facebook has said that it will start penalising individuals who repeatedly share misinformation. The company has introduced new warnings telling users that repeatedly sharing misinformation could result in their posts being moved lower in News Feed, reducing how visible those posts are to other people. Facebook's current policy is to down-rank individual posts that fact-checkers have debunked. However, posts can go viral long before fact-checkers review them, and until now there was no measure aimed at discouraging users from sharing misinformation in the first place. With the new change, Facebook will warn users about the consequences of repeatedly sharing misinformation.

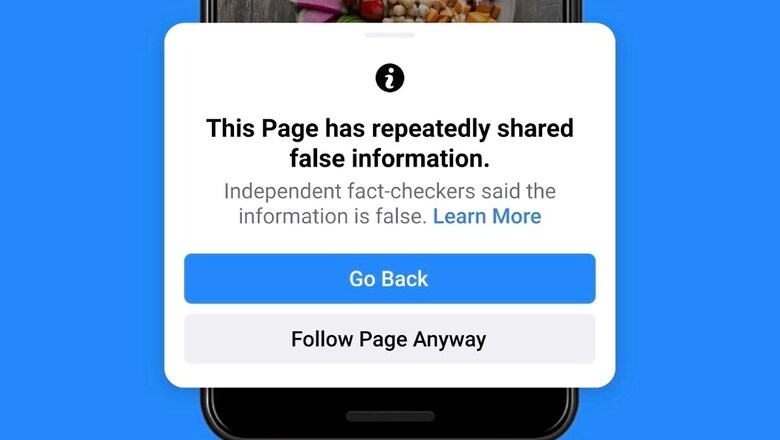

“Whether it’s false or misleading content about COVID-19 and vaccines, climate change, elections or other topics, we’re making sure fewer people see misinformation on our apps,” Facebook said in a blog post. Pages that Facebook considers repeat offenders will display pop-up warnings when new users try to follow them, and individuals who repeatedly share misinformation will receive notifications that their posts will be less visible in News Feed as a result. The notifications will also link to the fact-check for the post in question and give users the opportunity to delete the post. Facebook did not say how many posts it would take for a user or a Page to be considered a repeat offender, but the company already has a “strike” system for Pages that share misinformation.

The move comes a year after Facebook struggled to control viral misinformation about the COVID-19 pandemic, the 2020 US presidential election and COVID-19 vaccines.